At a time when Amazon already generates 35% of its turnover through algorithmic recommendation and is launching two new personalisation tools (Discover and Showroom), Gartner announces the end of personalisation algorithms by 2025. This ambiguous announcement deserves a more in-depth analysis.

Contents

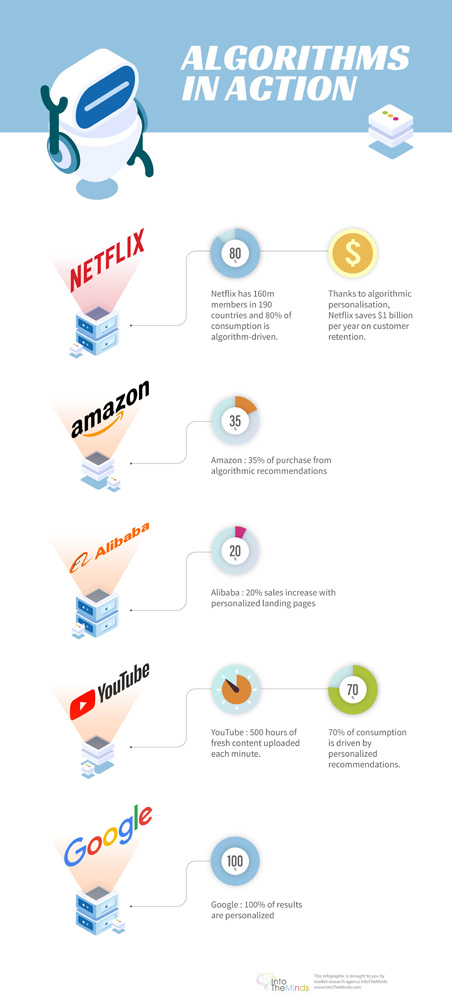

- Infographics on personalisation algorithms

- The purpose of algorithmic personalisation

- Why abandon personalisation?

- Problem #1: data collection and integration

- Problem #2: ROI

- Conclusions

Infographics

What is the purpose of algorithmic personalisation?

Personalisation algorithms have invaded our digital lives. The first of them (Google) personalises the results of its search engine. While 500 hours of content are uploaded to YouTube every minute, the platform’s recommendation algorithms suggest which content might interest us and even start playing it automatically. Recommendation is particularly useful in the world of media platforms (it is estimated that 70-80% of Netflix consumption comes from recommendation algorithms), but it doesn’t stop there. In e-commerce, algorithms are omnipresent: Amazon, for example, gets 35% of its turnover from these algorithms, in which it has been investing since 1994. In B2B, virtual assistants are also developing rapidly; they suggest to salespeople (cars, insurance, …) what to sell to their customers and thereby allow the relationship between salesperson and customer to be personalised.

Why would marketers abandon personalisation?

Algorithmic personalisation has been a hot topic ever since it spread to every digital aspect of our lives, especially media consumption. Eli Pariser coined the concept of filter bubbles, which we have discussed at length on this blog (see, for example, our proposal for a filter bubble classification). Even if their existence is still debated and a new definition is needed, some companies are taking the opposite approach, such as HBO with its human recommendations (service and site unavailable in most countries; see the video below).

Gartner’s thesis, however, rests on entirely different, purely economic arguments:

- Personal data are increasingly difficult to obtain.

- Marketers would be disappointed by the return on investment of algorithmic personalisation.

On the first point, we can’t fault Gartner. In Europe in particular, the GDPR has put a stop to certain practices. While the benefit of the GDPR for the consumer is still debatable (see our study on the subject), it is clear that companies have become much more cautious than before. A major clean-up was also carried out before the GDPR came into force, wiping out any personal data for which consent had not been documented.

The second point calls for more in-depth comment, as Gartner’s position seems difficult to defend at first sight. According to Gartner, 80% of marketers will abandon their personalisation efforts by 2025 for two main reasons:

- insufficient return on investment

- the difficulty of collecting and integrating personal data

Problem #1: data collection and integration

On this point, we can only agree with Gartner. What we observe with our customers is indeed great difficulty in integrating data. The lack of a solid foundation, and in particular the absence of a single client repository (CRU), leads many companies to attempt the impossible: reconciling disparate databases. The result is costly custom-built systems that are difficult to maintain. This is why we have been advocating the exclusive use of first-party data for several years, a position we defended once again at the annual BAM conference (read the detailed position paper we published for the occasion).
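To make the reconciliation problem concrete, here is a minimal sketch in Python. It assumes two hypothetical sources (a CRM export and a newsletter list) that share no common identifier, and it uses a normalised e-mail address as a stand-in matching key. The field names and the matching rule are assumptions chosen for illustration, not a description of any real system.

```python
def normalise_email(raw: str) -> str:
    """Lower-case and trim an e-mail address so trivial variants match."""
    return raw.strip().lower()


def merge_sources(crm_rows, newsletter_rows):
    """Build one record per customer, keyed by normalised e-mail.

    Rows without an e-mail cannot be matched and are returned separately.
    """
    repository = {}
    unmatched = []
    for source, rows in (("crm", crm_rows), ("newsletter", newsletter_rows)):
        for row in rows:
            email = row.get("email")
            if not email:
                unmatched.append((source, row))
                continue
            key = normalise_email(email)
            record = repository.setdefault(key, {"email": key, "sources": []})
            record["sources"].append(source)
            # Keep the first non-empty value seen for every other field.
            for field, value in row.items():
                if field != "email" and value and field not in record:
                    record[field] = value
    return repository, unmatched


# Hypothetical sample data: the same person appears in both sources.
crm = [{"email": "Ann@Example.com ", "name": "Ann Lee", "phone": "555-0100"}]
newsletter = [
    {"email": "ann@example.com", "name": "A. Lee"},
    {"email": "", "name": "Bob"},
]
repository, unmatched = merge_sources(crm, newsletter)
```

Even in this toy case, one row cannot be matched at all and the two sources disagree on the customer’s name. Real databases multiply these conflicts, which is where the maintenance costs come from.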

Our advice

Instead of stubbornly collecting and integrating poor-quality data (remember the adage “shit in, shit out”), dare to start from scratch. Above all, apply the following four principles to build the trust of your clients/users so that you can collect more data:

- Educate your users about the use and value of their data

- Give them back control

- Rely only on first-party data

- Build trust gradually

Problem #2: insufficient ROI

Insufficient ROI has two possible causes: benefits that are too low or costs that are too high. In our opinion, excessive costs are mainly due to the development (and maintenance) effort required. Whether you buy a personalisation system “off the shelf” or develop one yourself, no personalisation system can be integrated overnight.
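As a rough illustration of how costs weigh on ROI, the sketch below applies the usual definition (net gain divided by total cost) in Python. The cost categories and all figures are hypothetical; they only show how integration and maintenance effort enter the calculation.

```python
def personalisation_roi(incremental_margin: float,
                        licence_cost: float,
                        integration_cost: float,
                        maintenance_cost: float) -> float:
    """Return ROI as a fraction: (gain - total cost) / total cost."""
    total_cost = licence_cost + integration_cost + maintenance_cost
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (incremental_margin - total_cost) / total_cost


# Hypothetical yearly figures, in the same currency unit.
roi = personalisation_roi(
    incremental_margin=120_000,
    licence_cost=30_000,
    integration_cost=50_000,
    maintenance_cost=20_000,
)
```

With these assumed figures, integration and maintenance account for 70% of the total cost, which shows how development effort can dominate the result.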

If the benefits are too low, you have to ask yourself whether the goals you set are realistic and how you measure your efforts. Too often we find that overly ambitious goals are set while no suitable measurement method (A/B testing, for example) is in place. ROI should be measured against KPIs (Key Performance Indicators) that the personalisation algorithms can actually influence. Moreover, since adjustments are essential to achieving the objectives, the personalisation system must allow for them. “Off-the-shelf” algorithms, while meeting certain needs, can be limited when it comes to adapting to specific targets or data.
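For readers unfamiliar with A/B testing, here is a minimal sketch in Python of how the effect of personalisation on one KPI (the conversion rate) could be measured. It assumes a control group without recommendations and a test group with them, and it uses a standard two-proportion z-test. The visitor and conversion counts are invented for the example.

```python
import math


def relative_uplift(rate_control: float, rate_test: float) -> float:
    """Relative improvement of the test group over the control group."""
    return (rate_test - rate_control) / rate_control


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for H0: both groups convert equally."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: A = no personalisation, B = personalised.
visitors_a, conversions_a = 10_000, 200
visitors_b, conversions_b = 10_000, 250

uplift = relative_uplift(conversions_a / visitors_a, conversions_b / visitors_b)
z, p_value = two_proportion_z_test(
    conversions_a, visitors_a, conversions_b, visitors_b
)
```

A small p-value suggests that the observed uplift is unlikely to be due to chance alone. Without a comparison of this kind, it is hard to know whether a goal was missed because it was unrealistic or because the system underperformed.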

In the end, we agree with Gartner: disappointment is often great among those who try personalisation algorithms. But it is not the system that is to blame; it is the people who choose and implement it. Their lack of experience in implementing such systems leads them to take on projects that may not be profitable in the long run.

In conclusion: the end of personalisation is not around the corner

The arguments put forward by Gartner make sense and correspond to the reality of many situations we have encountered. To sum up, we can say that on the one hand, companies place disproportionate expectations on personalisation algorithms; on the other hand, they do not have the minimum basis necessary to ensure that the right data is collected optimally and that it can be consolidated.

This will inevitably bring many personalisation projects to a halt at companies that embarked on them without being prepared. But this in no way means that the personalisation market will shrink. On the contrary, the ever-faster digitalisation of our lives and the production of ever-larger volumes of data will reinforce the need for ever more personalisation in the interactions between companies and their customers. We will therefore only witness the decline of projects launched on the wrong basis. Meanwhile, other marketers, more aware of the technical and functional prerequisites, will launch projects of their own that will bear fruit.

SEO and personalisation algorithms

Recommendation algorithms are at the heart of personalisation on the web. Good SEO agencies try to understand the mechanisms of those algorithms and the signals used to change the ranking. Unfortunately, the “signals” used to derive the position in the SERP (Search Engine Results Page) change frequently, which requires an empirical approach. I quite agree with the 5-step approach proposed by this SEO agency:

- Plan

- Analyse

- Create

- Promote

- Report
