In a previous article, I highlighted the importance of processing speed when choosing a data preparation (ETL) solution. I ran a first benchmark of Alteryx, Tableau Prep, and Anatella on a file of 108 million lines. This time I repeated the exercise on 1.039 billion lines and added Talend to the benchmark. The results are unexpected: processing times differ by a factor of up to 19.

TEASER: stay tuned. In my next article, I’ll show you a trick to increase processing speed by a factor of 10.

Credits: Shutterstock

Introduction

In data preparation, speed is, in my opinion, one of the key differentiators among “ETL”-type solutions. Many data preparation operations are still carried out on files extracted from information systems, and handling large files can quickly make the work very laborious.

In my first test, I showed a 39% difference in processing time between Alteryx and Anatella on a file of 108 million lines. The processing was straightforward: open a flat file (CSV) and sort on the first column. Here, we move to the next level with a flat file of just over one billion lines (almost 50 GB!).


Methodology

For this test, I prepared a CSV file with 1.039 billion lines and 9 columns. The file weighed 48 GB, and I stored it on a standard hard disk.

I developed a straightforward data preparation process consisting of 3 steps:

- opening the CSV file

- descending sort on the first column

- group by on the values of the 7th column (which contained only 0 and 1)
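To make the three steps concrete, here is a minimal Python sketch of the same logic on a tiny, made-up sample. It is not how any of the benchmarked tools work internally. The sample values, the numeric type of the first column, and the choice of counting rows per group are all assumptions, since the article does not specify the aggregate used.

```python
import csv
import io
from collections import Counter

# Tiny stand-in for the 9-column benchmark file (values are made up).
SAMPLE_CSV = """\
3,a,b,c,d,e,1,x,y
1,a,b,c,d,e,0,x,y
2,a,b,c,d,e,1,x,y
"""


def run_pipeline(text):
    # Step 1: open the CSV (assumed to have no header row).
    rows = list(csv.reader(io.StringIO(text)))
    # Step 2: descending sort on the first column (assumed to be an integer).
    rows.sort(key=lambda r: int(r[0]), reverse=True)
    # Step 3: group by the 7th column (index 6).
    # Counting rows per group is an assumption for illustration.
    counts = Counter(r[6] for r in rows)
    return rows, dict(counts)


sorted_rows, group_counts = run_pipeline(SAMPLE_CSV)
print([r[0] for r in sorted_rows])
print(group_counts)
```

This version loads everything into memory, which is fine for a toy sample but says nothing about how the tools handle a 48 GB file.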

For this benchmark, I compared 4 well-known data preparation solutions:

- Talend Open Studio v7.3.1

- Anatella v2.35

- Tableau Prep 2020.2.1

- Alteryx 2020.1

I performed all of the tests on a desktop machine equipped with 96 GB of RAM and a 7th-generation i7 processor.

The data was stored on a 6TB Western Digital HDD.
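To get a sense of scale, the short calculation below derives the average line size from the figures above and estimates how long a single sequential pass over the file would take. The read rate of 150 MB/s is a hypothetical value chosen for illustration, not something measured on this machine, and the 48 GB is assumed to mean decimal gigabytes.

```python
FILE_SIZE_BYTES = 48e9       # 48 GB as stated in the article (decimal GB assumed)
LINE_COUNT = 1.039e9         # 1.039 billion lines
ASSUMED_HDD_MB_PER_S = 150   # hypothetical sequential read rate, NOT measured

# Average size of one line, in bytes.
bytes_per_line = FILE_SIZE_BYTES / LINE_COUNT

# Time for one full sequential read at the assumed rate, in seconds.
min_read_seconds = FILE_SIZE_BYTES / (ASSUMED_HDD_MB_PER_S * 1e6)

print(f"average line size: {bytes_per_line:.1f} bytes")
print(f"one sequential pass at {ASSUMED_HDD_MB_PER_S} MB/s: {min_read_seconds:.0f} s")
```

With these figures, a line is about 46 bytes on average. The read-time estimate changes directly with the assumed disk speed, so treat it as an order of magnitude only.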

The ETL solution at the top of the podium is 19 times faster than the one at the bottom.

Results

The results are pretty surprising and totally unexpected for me. I also ran tests on smaller data sets and observed that processing time does not scale linearly with file size.

| Solution | Processing time (seconds) |
| --- | --- |
| Alteryx | 2290 |
| Talend | 13954 |
| Anatella | 730 |
| Tableau Prep | 2526 |

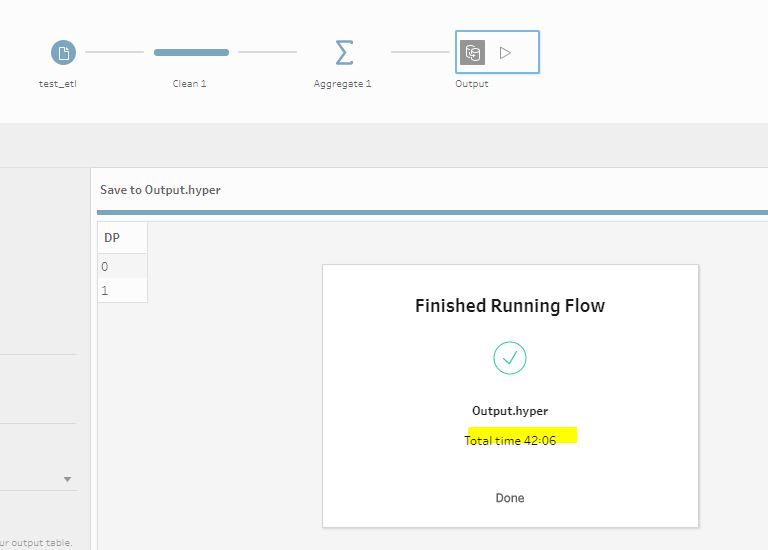

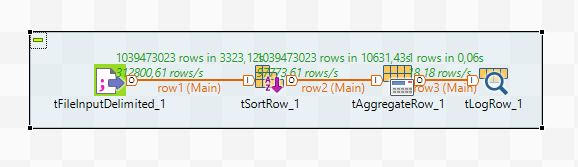

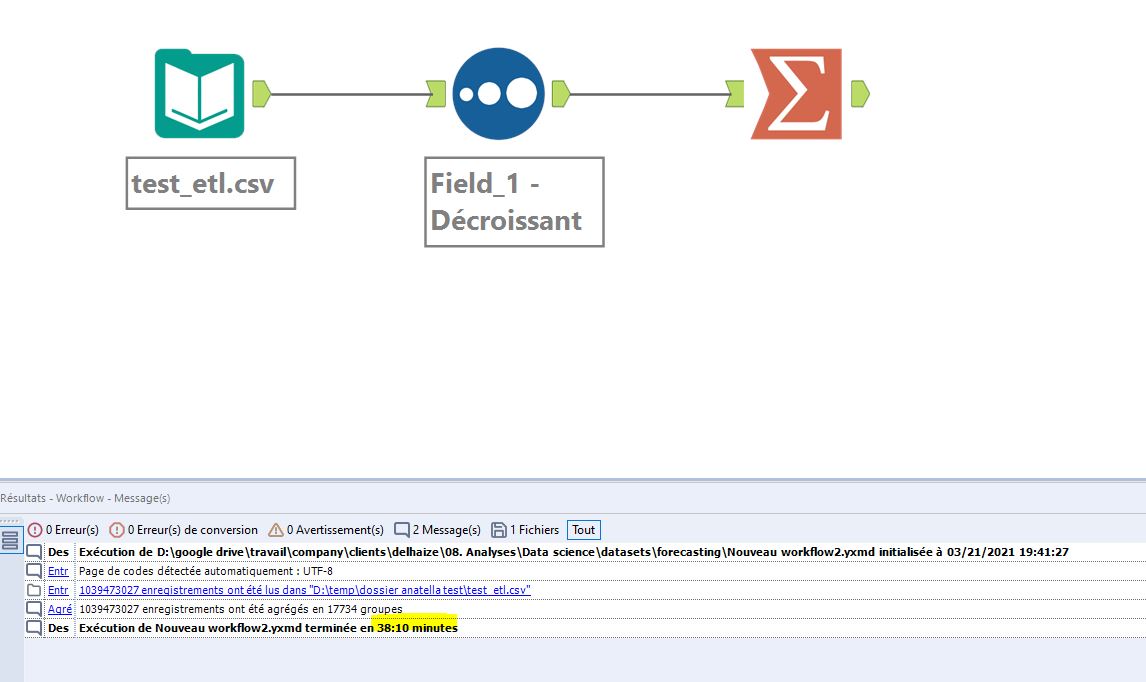

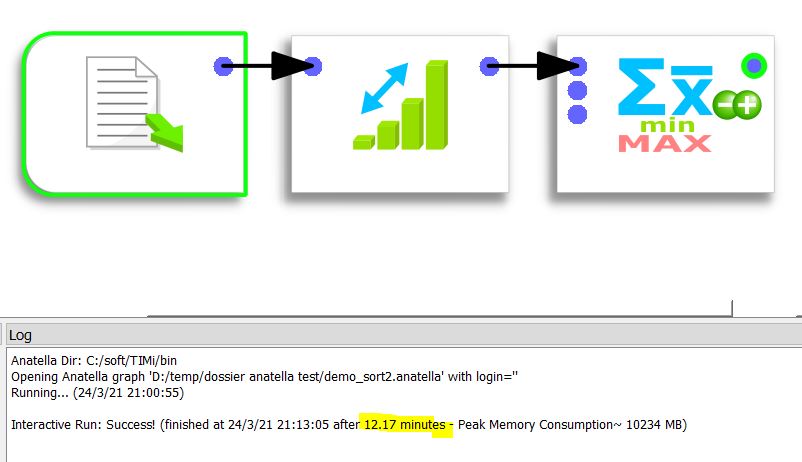

The screenshots above show the different pipelines and the associated processing times.

The results of this test are surprising: the same simple process produces huge differences in processing time from one tool to another.

The best-performing solution is Anatella v2.35, from Timi. The processing is done in only 730 seconds (about 12 minutes).

The slowest-performing solution is Talend Open Studio v7.3.1, which takes almost 4 hours (13,954 seconds) to process the same dataset. Talend Open Studio is, therefore, 19 times slower than Anatella! Imagine the poor data scientist who has to work with Talend Open Studio: they launch the processing in the morning and can only start working on the results when they come back from their lunch break around 1 pm.

Alteryx and Tableau Prep take 2nd and 3rd place respectively, separated by only about 4 minutes.
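The figures quoted in this section can be rechecked from the measured times with a few lines of Python. The sketch below only uses the processing times from the results table and the line count from the methodology. The lines-per-second figure is a derived indicator, not something the tools report.

```python
# Measured processing times from the results table, in seconds.
TIMES_S = {"Alteryx": 2290, "Talend": 13954, "Anatella": 730, "Tableau Prep": 2526}
LINE_COUNT = 1_039_000_000  # lines in the benchmark file

fastest = min(TIMES_S.values())

# Slowdown of each tool relative to the fastest one.
ratios = {name: seconds / fastest for name, seconds in TIMES_S.items()}

for name, seconds in sorted(TIMES_S.items(), key=lambda kv: kv[1]):
    hours, remainder = divmod(seconds, 3600)
    minutes = remainder // 60
    million_lines_per_s = LINE_COUNT / seconds / 1e6
    print(
        f"{name:13s} {seconds:6d} s  {hours}h{minutes:02d}  "
        f"x{ratios[name]:.1f}  {million_lines_per_s:.2f} M lines/s"
    )

# Gap between 2nd and 3rd place, in seconds.
gap_s = TIMES_S["Tableau Prep"] - TIMES_S["Alteryx"]
print(f"Alteryx vs Tableau Prep: {gap_s} s")
```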


Conclusion

The differences between the solutions tested are huge: there is a factor of about 19 between the best-performing solution and the worst.

This highlights the importance of processing speed in the data preparation process. Solutions that work in “No Code” mode are far from being equally well optimized, and the data scientist's work can be significantly slowed down as a consequence.

However, there are ways to speed up processing (using a proprietary format as input, storing the data on an SSD rather than an HDD). They will be the subject of a future test, which will highlight the room for improvement of each of the ETL tools tested.
