Attending conferences has the benefit of exercising your capacity to be astonished: you confront new subjects and meet new people. The “Media Fast Forward” event, organised on 14 December 2018 by the VRT (the Belgian Dutch-speaking public radio and television broadcaster), was a striking melting pot of the best in innovation and research in the media sector.

In addition to the conferences, which ran from 10am to 4.30pm in seven parallel tracks across the different areas of BOZAR, several dozen start-ups demonstrated innovative products in the main hall. BOZAR, the Palais des Beaux-Arts in Brussels, is well known for hosting the finals of the Queen Elisabeth Competition.

My interest in artificial intelligence first led me to the session on journalism and algorithms (entitled “news and algorithms”), in which Ike Picone (Université Libre de Bruxelles), Maarten Schenk (Trendolizer), Philippe Petitpont (Newsbridge) and Judith Möller (University of Amsterdam) took part.

The propagation mechanisms of Fake News

Ike Picone tackled the subject of Fake News by analysing the dynamics that make them spread. He proposed a 3-step procedure that I summarise in my own way below:

- Get attention through strategies that promote dopamine production

I found the link to physiological reactions quite appealing. What Ike Picone is saying here is that you have to play on emotions to provoke this dopamine discharge; otherwise, the human mind is not stimulated enough to cause a reaction that promotes the spread of fake news.

- Spread the fake news

Two types of fake news compete here: those that are politically motivated and those that are manufactured for commercial reasons.

- Fuel the tensions

The last step, of course, is to fuel the tensions by pressing where the emotions are strongest (what Ike Picone called the “hot button”) and by targeting an online community within which the controversy can be kept alive and spreading.

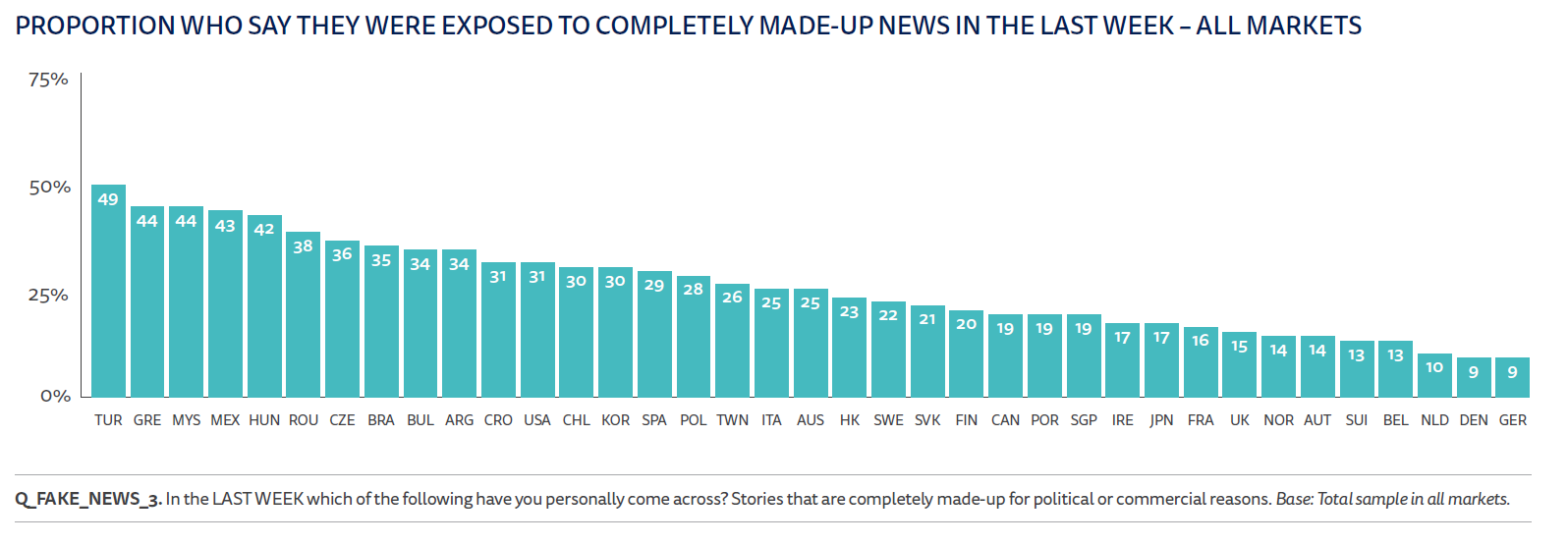

Although fake news has existed for as long as words have been spoken, the problem has probably never been as big as in the last 18-24 months. The latest Reuters Institute Digital News Report largely reflects this phenomenon, which politicians on all sides are now seeking to regulate. That is both pathetic and ironic, since the political class’s ignorance of the technology underlying fake news is compounded by its interest in using it to serve purely political agendas.

Recommendation algorithms, filter bubbles and diversity

A presentation by Judith Möller (University of Amsterdam) returned to the subject of filter bubbles and the place of algorithmic recommendations in this context. I found it particularly relevant that the concept of “diversity” was put back at the centre of the debate and that different forms of diversity were assessed. I will have to come back to this exciting work in a later blog post, but I can’t resist the pleasure of sharing conclusions that are entirely in line with my own work and research on the subject:

- algorithms ultimately produce more diversity than human editorial choice

- filter bubbles reflect our own biases, and the systems that produce them are the result of those biases (which of course raises the problem of algorithmic governance, beyond ethical issues)

As I wrote a few months ago, algorithms are not necessarily at the origin of echo chambers. It is primarily interface designers, designers and journalists themselves who need to be made aware of this issue in order to achieve greater diversity.

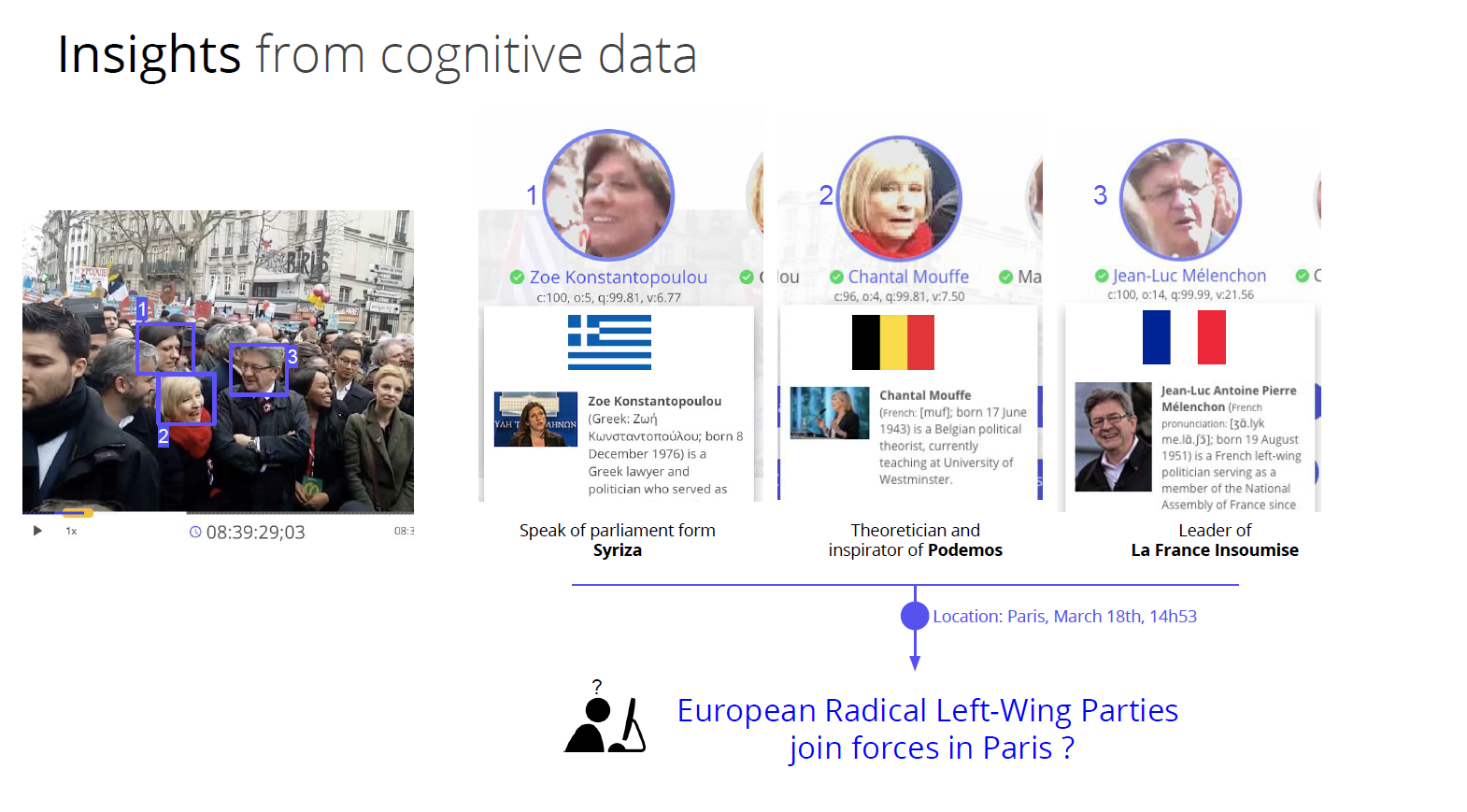

Artificial intelligence as a tool for journalists

Philippe Petitpont (Newsbridge) presented an innovative way to extract more meaning from the “rushes” produced by journalists. The different analyses that can be carried out on the videos make journalistic work easier, and insights can be generated from facial recognition. The example he gave, identifying the people around Jean-Luc Mélenchon at a demonstration through facial recognition, seemed to me particularly relevant and exciting in a journalistic context.

Insights proposed by Newsbridge based on facial recognition applied to video segments

Trend detection on the Internet helps to identify Fake News

To finish this part of the session, Maarten Schenk (Trendolizer) talked about his experience in detecting trending topics on the Internet. Trendolizer’s concept is to surface the subjects that are on the rise, which indirectly led Maarten to take an interest in fake news, whose propagation cycle closely follows that of more traditional “breaking news”.
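As a rough illustration only of what “surfacing the subjects that rise” can mean, here is a minimal Python sketch. It is not Trendolizer’s actual method: the function name `rising_topics`, the `min_count` threshold and the sample data are all assumptions made for the example. The sketch simply compares how often each topic is mentioned in two consecutive time windows and ranks topics by relative growth.

```python
from collections import Counter


def rising_topics(previous, current, min_count=3):
    """Rank topics by relative growth in mentions between two time windows.

    Hypothetical helper for illustration; not taken from any real product.
    """
    prev_counts = Counter(previous)
    curr_counts = Counter(current)
    scores = {}
    for topic, count in curr_counts.items():
        if count < min_count:
            continue  # too few mentions in the current window to be meaningful
        # Add 1 to the denominator so brand-new topics do not divide by zero.
        scores[topic] = count / (prev_counts[topic] + 1)
    # Highest growth ratio first.
    return sorted(scores, key=scores.get, reverse=True)


# Made-up sample data: one topic label per observed mention.
previous_hour = ["budget", "budget", "weather", "football", "football", "football"]
current_hour = ["budget", "hoax", "hoax", "hoax", "hoax",
                "football", "football", "football"]

print(rising_topics(previous_hour, current_hour))
```

In this toy data a topic that appears from nowhere ranks above one that is merely popular, which is why the same kind of signal can flag a fake story and a genuine breaking story alike.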

Through a series of examples, each more edifying than the last, Maarten demonstrated to the audience the depth of his knowledge in detecting fake news. His account was reinforced by a BBC piece that set two figures side by side: on the one hand Christopher Blair, king of fake news (“Godfather of Fake News”, as the BBC called him), and on the other Maarten, king of the “fact checkers”. Don’t miss the BBC podcast on this subject.

I heartily invite you to visit Maarten’s website to get an idea of his work and to see first-hand what makes up the very essence of fake news.
